Web Scraping with Python

Web scraping is an automated method of extracting large amounts of data from websites. Suppose we need some information from a website. The usual approach is to copy and paste the data from the site into a file, but in practice this is cumbersome. And if you work on data analysis, big data, machine learning, or AI projects, chances are you will need to collect data from many websites. This is where web scraping comes in.

Why Python?

Python is widely used for manipulating and working with data thanks to its stability, its extensive libraries for statistics and data handling, and its simplicity.

Prerequisites

Before we begin, set up a Python environment on your machine. If you have not done so already, download and install Python from the official website, python.org.

We will also install the Beautiful Soup and Requests packages.

Getting Started

First, make sure you have installed the Python packages the scraper needs:

pip install beautifulsoup4

pip install requests

After installing them, import both at the top of your Python file:

import requests

from bs4 import BeautifulSoup

Next, create a variable that holds the URL of the website you want to scrape:

URL = "-----url to be pasted-----"  # Replace with the URL of the page to scrape

Send an HTTP GET request to that URL with the get() function from the requests module:

req = requests.get(URL)
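In practice it is worth guarding that request a little. The sketch below shows one way to do it and is not part of the original walkthrough. The helper name `fetch_html`, the 10-second timeout and the User-Agent string are illustrative assumptions. `raise_for_status()` turns 4xx/5xx responses into exceptions, so you don't silently parse an error page.

```python
import requests


# Hypothetical helper (not from the original article): fetch a page and
# fail loudly instead of handing an error page to BeautifulSoup.
def fetch_html(url: str, timeout: float = 10.0) -> bytes:
    # Assumed User-Agent value; some sites reject the default one sent by requests.
    headers = {"User-Agent": "Mozilla/5.0 (compatible; example-scraper/0.1)"}
    # timeout stops the call from hanging forever on an unresponsive server
    resp = requests.get(url, headers=headers, timeout=timeout)
    # Raises requests.HTTPError for 4xx/5xx status codes
    resp.raise_for_status()
    # Raw bytes, the same thing req.content gives you
    return resp.content
```

For example, `html = fetch_html(URL)`. A URL without a scheme, such as "example.com", is rejected by requests with a `MissingSchema` error before any network traffic happens.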

After that, create a BeautifulSoup instance:

soup = BeautifulSoup(req.______, 'type of parser')

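In this line you fill in two blanks. The first is the part of the response you want to parse:

- req.content gives the raw HTML of the page as bytes.

- req.text gives the same HTML decoded into a Unicode string. It still contains the markup, not just the visible text.

- req.headers holds the HTTP response headers, such as Content-Type. These describe the response rather than the page itself, so they are not something you pass to BeautifulSoup.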
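To see how the first two options differ without making a network request, the sketch below feeds BeautifulSoup the same small, made-up page twice. The first time it is a `str`, which is what `req.text` gives you. The second time it is `bytes`, which is what `req.content` gives you. With bytes, Beautiful Soup detects the encoding itself and records it in `original_encoding`. That is one reason `req.content` is often the safer choice when a server reports the wrong charset.

```python
from bs4 import BeautifulSoup

# Made-up sample page standing in for a real response body
html_str = '<html><head><meta charset="utf-8"><title>Café</title></head><body></body></html>'
html_bytes = html_str.encode("utf-8")  # like req.content: raw bytes

# Like req.text: the markup is already a decoded Unicode string
soup_from_text = BeautifulSoup(html_str, "html.parser")
# Like req.content: Beautiful Soup works out the encoding from the bytes
soup_from_bytes = BeautifulSoup(html_bytes, "html.parser")

print(soup_from_text.title.string)        # Café
print(soup_from_bytes.title.string)       # Café
print(soup_from_text.original_encoding)   # None (input was already text)
print(soup_from_bytes.original_encoding)  # the encoding it detected
```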

The second blank is the parser. The parsers Beautiful Soup supports are:

- html.parser (built into Python)

- lxml

- html5lib

- xml (lxml's XML parser, for XML documents)
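Parsers differ mainly in speed and in how they repair broken markup. The small illustration below uses only html.parser, because it needs no extra install. The made-up snippet leaves its `<p>` and `<b>` tags unclosed, and the parser still builds a tree you can navigate. lxml and html5lib would also wrap the same input in `<html>` and `<body>` tags, which html.parser does not add.

```python
from bs4 import BeautifulSoup

# Deliberately malformed, made-up markup: <p> and <b> are never closed
broken = "<p>Price: <b>42"

soup = BeautifulSoup(broken, "html.parser")
print(soup)               # the tree html.parser built from the broken input
print(soup.b.string)      # 42
print(soup.p.get_text())  # Price: 42
```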

html.parser ships with Python, so it needs no installation. The others are separate packages. Install the one you want with pip:

pip install ______  # parser package name, e.g. lxml or html5lib
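If you share your script with people who may not have the same parsers installed, you can fall back gracefully. This is a minimal sketch. The helper name `make_soup` and the order of preference are assumptions: lxml for speed, html5lib for browser-like leniency, and the built-in html.parser last. Beautiful Soup raises `FeatureNotFound` when you ask for a parser that is not installed.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Assumed order of preference; html.parser is built in, so the loop always ends there
PREFERRED_PARSERS = ("lxml", "html5lib", "html.parser")


# Hypothetical helper: build the soup with the first parser that is installed
def make_soup(markup):
    for parser in PREFERRED_PARSERS:
        try:
            return BeautifulSoup(markup, parser)
        except FeatureNotFound:
            # This parser is not installed; try the next one
            continue
    raise RuntimeError("no HTML parser available")
```

Usage: `soup = make_soup(req.content)`.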

Now you can print the parsed content with the print() function:

# prettify() turns a Beautiful Soup parse tree into a nicely formatted Unicode string, with a separate line for each tag and each string
print(soup.prettify())
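On a real page prettify() prints a lot, so here it is on a tiny made-up snippet. The sketch also shows get_text(), which returns only the text with the tags stripped out. It is handy when you want the readable content rather than the markup.

```python
from bs4 import BeautifulSoup

# Made-up snippet standing in for a real page
snippet = "<div><h1>Title</h1><p>First <b>bold</b> para</p></div>"
soup = BeautifulSoup(snippet, "html.parser")

# prettify(): one tag or string per line, indented by nesting depth
print(soup.prettify())

# get_text(): only the text nodes, glued together with the tags removed
print(soup.get_text())                 # TitleFirst bold para
# A separator plus strip=True keeps text from different elements apart
print(soup.get_text(" ", strip=True))  # Title First bold para
```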

Ta-da! You have successfully scraped the page you wanted. The soup object holds all of its HTML as a nested tree, so you can now extract any useful data from it programmatically.
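The most common ways to pull data out of the soup are find(), find_all() and CSS selectors via select(). The sketch below runs them against a small, made-up page rather than a real site, so the tag names, class and id are purely illustrative. select() relies on the soupsieve package, which is installed automatically as a dependency of beautifulsoup4.

```python
from bs4 import BeautifulSoup

# Made-up page standing in for the HTML you fetched
html = """
<html><body>
  <h1 class="headline">Daily Picks</h1>
  <ul id="links">
    <li><a href="/a">Alpha</a></li>
    <li><a href="/b">Beta</a></li>
  </ul>
</body></html>
"""
soup = BeautifulSoup(html, "html.parser")

# find(): the first matching tag (or None if nothing matches)
title = soup.find("h1", class_="headline").get_text(strip=True)

# find_all(): every matching tag; here each link's text and href attribute
links = [(a.get_text(strip=True), a["href"]) for a in soup.find_all("a")]

# select(): CSS selectors, convenient for reaching into nested structure
items = [li.get_text(strip=True) for li in soup.select("ul#links > li")]

print(title)  # Daily Picks
print(links)  # [('Alpha', '/a'), ('Beta', '/b')]
print(items)  # ['Alpha', 'Beta']
```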

The accompanying repo has the full code and a worked example of web scraping. Have a look, and don't forget to star it :P

Future Articles

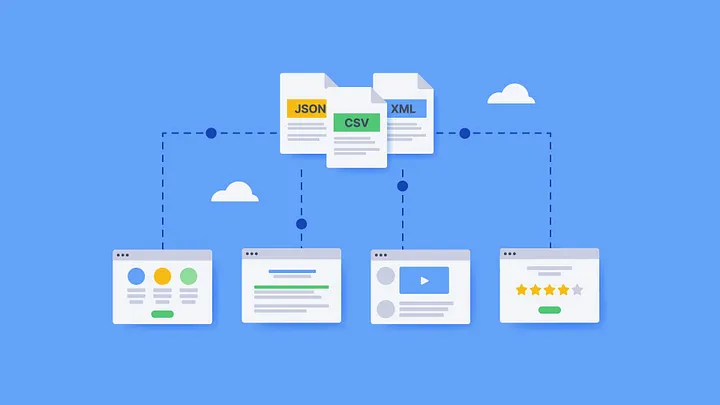

More advanced web scraping features, such as automated scraping and compiling large amounts of data into readable formats like PDFs, DOCs, tables, and lists.

Web scraping with Go, for its speed.

If you find this useful, feel free to share it, and comment below with any issues you run into.

Happy Hacking :)

Originally posted on Medium here